My First Experience Coding With Terraform

As a longtime ARM template user and writer, here is my experience of working with Terraform for the first time.

My background

Over the past four years, when deploying cloud infrastructure, I have always worked with and written ARM (Azure Resource Manager) templates. I have worked at companies where .NET development was very prevalent and Microsoft products were heavily purchased.

Earlier this year, I took a new job and it required different thinking and logic on how to apply and recommend tool sets due to the new company’s size (it is much larger than my previous employer).

There are many studies that show this approach makes for happier and more productive teams. I think both Accelerate and The DevOps Handbook report this, and probably many other books do too.

When I had a team select Terraform, I was excited

I had worked with Packer in the past, which is made by HashiCorp, the same company that makes Terraform. I had a good experience working with Packer, so I had some background on how documentation was written and places to look if I got stuck. So…I started searching…

No environment variables, what!

One of the first things I learned was that Terraform files cannot read environment variables inline the way a Packer file can.

Additionally, you need a file, conventionally called variables.tf, which declares every variable that gets passed into your main infrastructure deployment file(s). Again, variables.tf should list all variable names, but not necessarily their values. You probably don’t want to hardcode values for variables, especially secrets.

The variables.tf file will look something like this:
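A minimal sketch of such a file, assuming Terraform 0.11 syntax. Only location, client_id, client_secret and tenant_id are named in this post; the "eastus" default, resource_group_name and subscription_id are assumptions for illustration.

```hcl
# variables.tf (illustrative sketch, Terraform 0.11 syntax)

# Has a default, so no value needs to be supplied for it.
variable "location" {
  default = "eastus" # assumed region, not taken from the post
}

# No defaults below: values must be supplied at deploy time.
variable "resource_group_name" {} # hypothetical name

variable "subscription_id" {} # hypothetical name
variable "client_id" {}
variable "client_secret" {}
variable "tenant_id" {}
```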

The location variable has a default value, but as you can see, the other variables here don’t. This is just a small snippet, not the entire variables.tf that is used for deployment.

Now you also need a main infrastructure deployment file, where you describe what is going to be deployed. This can be handled a few different ways.

If you have a very small system, you may just want a file called main.tf which holds the description of all your infrastructure.

If you have a larger system or a distributed system, you may want to break up your main files by component. That would mean you could have files called web.tf and database.tf in a system. If you’ve worked with ARM templates previously, this is sort of like a linked ARM template.

As long as your main file(s) are in the same directory, Terraform just knows to deploy them. You don’t have to specify a path (though you can if you need to!).

How do we get started with our main file(s)?

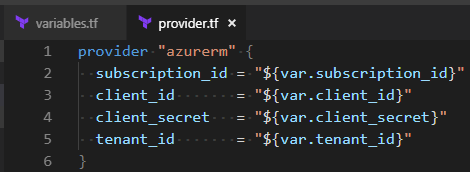

First, we need to specify the cloud platform provider. This can be done in the main file itself or in a file called provider.tf.

I chose to put my Azure credentials in a separate file. This is just preference though; the very important part is to ensure your variables for your Azure service principal account are called:

- client_id

- client_secret

- tenant_id

If you try to call them anything different, your deployment will fail.

Terraform does cool stuff for us in the background when we call it, but it also restricts us a bit since it does a lot of abstraction for us.

It took me a while to figure this issue out…the silliest errors take the longest to find, right? My provider.tf file looks like this (these lines of code can also go at the top of your main.tf):
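A sketch of what such a provider.tf could contain, assuming the 1.x azurerm provider that was current alongside Terraform 0.11 (newer provider versions also require a features block). The argument names on the left are fixed by the provider; var.subscription_id is an assumed variable name that does not appear in this post.

```hcl
# provider.tf (illustrative sketch, Terraform 0.11 syntax)
provider "azurerm" {
  subscription_id = "${var.subscription_id}" # assumed variable name
  client_id       = "${var.client_id}"
  client_secret   = "${var.client_secret}"
  tenant_id       = "${var.tenant_id}"
}
```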

Note that everything is a variable, and nothing is hardcoded.

This helps make code extensible and easily transferable to other teams. There are cases where it is super hard to do this or in a rare case you may not want to make your code extensible, but why not help another team out?

The Main Event

On to our main.tf file. Terraform itself is not super hard to learn. The documentation from HashiCorp is decent, but it could be improved by having more examples.

A simple block to create a resource group looks like this:
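As a rough sketch in Terraform 0.11 syntax, such a block might read as follows. The testing-terraform label comes from this post; var.resource_group_name is a hypothetical variable name, and both variables would need to be declared in variables.tf.

```hcl
# main.tf excerpt (illustrative sketch)
resource "azurerm_resource_group" "testing-terraform" {
  name     = "${var.resource_group_name}" # hypothetical variable name
  location = "${var.location}"
}
```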

The name of the resource group will be the variable, and testing-terraform is just a label Terraform uses to refer to the resource. You can put your variable there too, though.

This is MUCH shorter than what one would see in an ARM template for defining resources. Which is cool, right? Who doesn’t prefer writing less code? Be warned though, it comes with certain sacrifices. Let’s discuss.

Sacrifice #1: No API Versions

If you’ve worked with ARM templates in the past, you’ll notice there is not an API version listed.

In the small amount of research and discovery I’ve done with Terraform, I haven’t seen a way to pass an API version for each resource…remember, each resource in Azure has its own API version (e.g. compute and key vault creation will use different API versions). If your infrastructure is using older Azure features or rarely gets updated, you probably don’t care that you aren’t specifying the API version.

If it is possible to specify API versions please leave a comment with a link or example!

Sacrifice #2: Portal Shows “No Deployments”

When deploying actual ARM templates, the Azure portal lets us know a deployment is in progress, but Terraform deployments do not show this.

Terraform manages the state of your infrastructure in a totally different way than Azure ARM templates. It will create a state file as it is deploying, which holds the description of all your resources.

I won’t get into this in too much detail because there are a lot of articles on the interwebz about it. Just know that this state file holds sensitive secrets, and if you don’t keep it properly, the state of your entire infrastructure will be at risk.

Sacrifice #3: Management Of Variables Via Orchestration

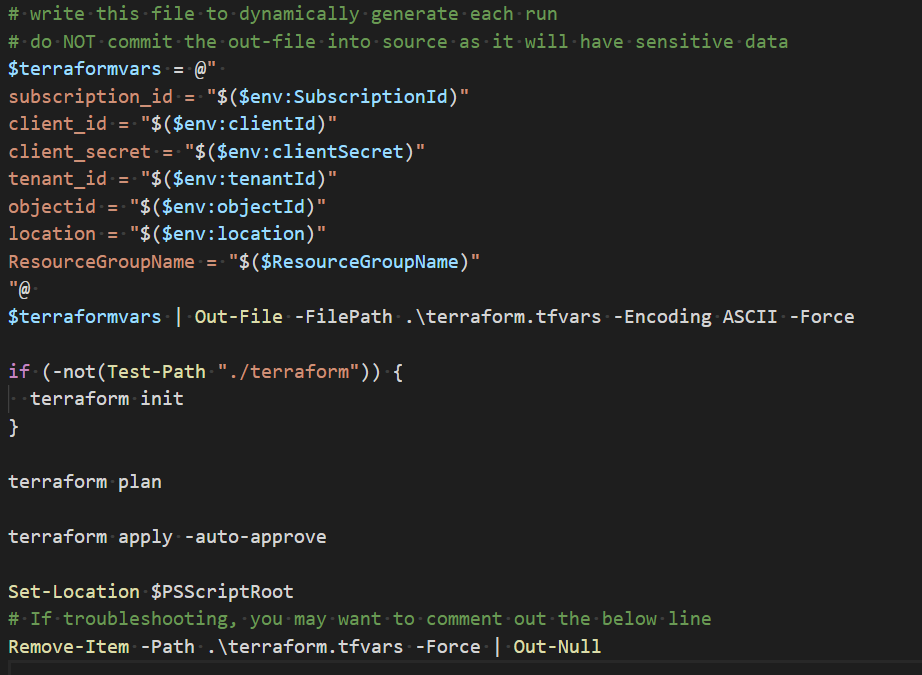

Earlier I mentioned you cannot pass environment variables directly into your main.tf file and you must also have a variables.tf file which defines all your variables. Again, we don’t want to hardcode things so how can we pass in values?

This becomes an even larger question if we need the ability to get the value of something already deployed and pass that into our template, or follow naming conventions that we dynamically generate. The only way I found around this issue is to write a temp file and then delete that file. So I basically use a PowerShell script to orchestrate all this stuff for me. Yeah, this sucks. Sucks a lot. Especially since Packer can accept environment variables directly.

I am using Terraform v0.11.10 while writing this post, and I hope in a future release they make it as easy as Packer so no file needs to be written. Essentially, a sucky workaround like this will be fine for now, but ensure you have the terraform.tfvars file in your .gitignore!
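For illustration only, a generated terraform.tfvars could look something like this. Every value is a placeholder, and resource_group_name and subscription_id are assumed variable names rather than ones taken from my deployment.

```hcl
# terraform.tfvars (written at deploy time, never committed to git)
# Every value below is a placeholder.
resource_group_name = "example-rg"                           # assumed variable name
subscription_id     = "00000000-0000-0000-0000-000000000000" # assumed variable name
client_id           = "00000000-0000-0000-0000-000000000000"
client_secret       = "placeholder-secret"
tenant_id           = "00000000-0000-0000-0000-000000000000"

# List values line up by position: the first name goes with the first value.
web_secret_names  = ["secret-one", "secret-two"]
web_secret_values = ["placeholder-1", "placeholder-2"]
```

Terraform automatically loads a file with this exact name from the working directory, so terraform plan and terraform apply need no extra flags to pick the values up.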

Notice that my script has to run terraform init; this initializes the Azure provider for my deployment. If we don’t initialize it, we’ll see a pretty straightforward error. If you ever check your Terraform version, you’ll notice you get a Terraform version and a provider version (they are separate).

Some people may also tsk-tsk at the auto-approve but that is what pull requests are for, right? 😉 Even though we are generating a file called terraform.tfvars we still need our variables.tf, always! This file must also be called terraform.tfvars for that Terraform magic to work without any

Now I’m scared, why should I use Terraform?

It seems like a lot to manage. If you think about ARM templates, a lot of people use a parameters file instead of a parameters object (I’ll save that rant for another post).

So essentially you can have as few as two files to manage. In my opinion, Terraform does an awesome job of keeping it simple. I could really see anyone new to cloud infrastructure automation being able to write Terraform.

Kewlness #1: It’s Simple

Abstraction is a great thing when done properly. Many configuration-as-code platforms and IaC tools are built on a traditional programming language, but to write code in them, you just need to know the abstraction.

All that crazy hardness of programming kind of goes away, until you want to do something a bit crazy. Then you need to know more in-depth techniques, but you’ll be way less afraid of learning them because you’ve built confidence. Since most of these tools are open source, you can also go look at GitHub for the source code of your favorite tool to learn more about how that company uses the traditional programming language.

The other cool thing Terraform does for us is that it just “knows” about its files, assuming you’ve followed its conventions and the files are in the same directory.

Then we can just run terraform plan and terraform apply and boom, there goes my infrastructure. If you don’t keep all your Terraform code in the same directory, you may need to give Terraform the path to it.

In 105 lines of code with Terraform, I can create a resource group, deploy a key vault with N secrets, deploy N network interfaces, an availability set, and N virtual machines. Which brings me to my favorite thing about Terraform.

Kewlness #2: Loops

Have you ever written loops in an ARM template? They are a pain and come with silly error messages if you’ve messed them up.

In Terraform however, they are much easier. Let me do my best to explain.
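A hedged sketch of such a loop in Terraform 0.11 syntax, not my exact file: the resource label web and var.key_vault_uri are hypothetical, and the vault_uri argument reflects the 1.x azurerm provider of that time (later provider versions use key_vault_id instead).

```hcl
# main.tf excerpt (illustrative sketch, Terraform 0.11 syntax)

# Hypothetical variable holding the URI of an existing key vault.
variable "key_vault_uri" {}

# One secret is created per entry in web_secret_names.
resource "azurerm_key_vault_secret" "web" {
  count     = "${length(var.web_secret_names)}"
  name      = "${element(var.web_secret_names, count.index)}"
  value     = "${element(var.web_secret_values, count.index)}"
  vault_uri = "${var.key_vault_uri}"
}
```

Here length() sets how many copies Terraform creates, and element() picks the list item at the current count.index for each copy.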

Terraform will take my list of web_secret_names and just loop through however many items are in my list. Remember, we still must define web_secret_names in our variables.tf, and it must be of type list for it to be used in loops.
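In Terraform 0.11 syntax, those declarations could be sketched roughly like this (from 0.12 onward the type would be written as list(string) rather than the quoted "list"):

```hcl
# variables.tf excerpt (illustrative sketch, Terraform 0.11 syntax)
variable "web_secret_names" {
  type = "list"
}

variable "web_secret_values" {
  type = "list"
}
```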

Notice again, we don’t give it any values. All the values will be generated (see sacrifice #3). Additionally, my web_secret_values must also be a variable defined as type list.

Our count.index will correspond with each value in the list. You could also just set your count to a fixed number (like 2 or 4), but why do that? It is much cooler to allow for any integer value!

This is very powerful because we could deploy a lot of resources with very little code. You can loop on any variable that is defined as type list. Remember you also have the power to change the type so something that may have previously been a string can easily become a list.

So, in 6 lines of code from my main.tf, I can deploy any number of key vault secrets. Pretty kewl.

Kewlness #3: It’s One Language For Many Cloud Platforms

While we hear cloud agnostic tossed around so much, I’m not sure I would agree with the many people that say Terraform is cloud agnostic.

What I do agree with is that the Terraform language is the same for any cloud, but your main.tf file will always be different from one provider to the next.

For example, we could not take our coding examples above and deploy them in Google Cloud or AWS. However, we can probably easily translate the main.tf file because we already know how to write Terraform.

You can see the advantage of this if you have services in many cloud providers: you don’t have people learning Azure-, AWS- and Google Cloud-specific languages. Instead, they learn Terraform and can then read the infrastructure deployments for all cloud providers.